Where it all began

My homelab journey started with buying an old slim desktop computer and a 2TB hard drive. As a beginner, I chose OpenMediaVault as the OS for its simplicity and ease of maintenance. It worked for quite some time; however, as I gained more experience, I discovered Docker containers and open-source projects. At that time, I didn’t have a public IP or domain to make my self-hosted apps public, so everything was local. I mainly used the server as a shared drive, a Plex server (back then it was still decent), and a Home Assistant hub. With each new service the load grew, eventually reaching >700%. The old server had a small form factor, so there was no room to upgrade.

So a new server it is.

Server version 2.0

I bought a used set: a Supermicro X9SCM-F server motherboard and an Intel Xeon E3-1220 V2 CPU with a tower cooler.

The RAM is where it gets tricky. The board only works with unbuffered ECC DDR3 memory. ECC means error correction, which will be very handy in the future. Those sticks were hard to buy, but one by one I collected 4 of them, 8GB each. To power the server, I found a 450W PSU.

To allow for future growth of the server, I selected a Chieftec Mesh case that can fit seven 3.5″ or 2.5″ drives on individual quick-mount sleds.

Where do I store my data?

As for the hard disks, I moved my 480GB SSD and an old 2TB HDD, data included, to the new server.

I wanted to achieve disk redundancy, so I had to buy one more 2TB HDD.

Both HDDs work together in RAID 1 (redundant array of independent disks), which means that any data written to the storage is written to both drives. Thanks to that, either of the two drives can die and no data is lost. Then I simply replace the faulty drive with a new one, rebuild the array (copy the data from the surviving drive to the new one), and I’m good to go.
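To make the mirroring idea concrete, here is a toy sketch in Python. It is not how a real RAID implementation works internally; the `Mirror` class and its method names are made up for illustration, and each "drive" is just a dictionary.

```python
class Mirror:
    """Toy RAID 1: every block is written to both drives."""

    def __init__(self):
        # Each "drive" maps block number -> bytes; None marks a failed drive.
        self.drives = [{}, {}]

    def write(self, block, data):
        # Duplicate the write on every healthy drive.
        for drive in self.drives:
            if drive is not None:
                drive[block] = data

    def read(self, block):
        # Any healthy drive can serve the read.
        for drive in self.drives:
            if drive is not None:
                return drive[block]
        raise IOError("both drives failed")

    def fail(self, index):
        self.drives[index] = None

    def replace_and_rebuild(self, index):
        # Copy everything from the surviving drive onto the new one.
        survivor = next(d for d in self.drives if d is not None)
        self.drives[index] = dict(survivor)


array = Mirror()
array.write(0, b"family photos")
array.fail(0)                    # one drive dies
recovered = array.read(0)        # still readable from the other drive
array.replace_and_rebuild(0)     # the new drive receives a full copy
```

After the rebuild both dictionaries hold the same blocks again, which is the state the array was in before the failure.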

Continuing on the idea of redundancy:

Like many server boards, mine has some cool tricks up its sleeve.

First, it has not one but three Ethernet ports (the kind you would be using right now if Wi-Fi had not been invented). Two of them run at 1Gbit/s, and we’ll talk about the third one later (LINK). With some magic on both the router and the server side (Link Aggregation, if you’re interested) and two Ethernet cables, I can unplug either cable and experience no interruption. Not that I’d do this, but an offline server is the last thing I want while on vacation.

Backups, backups, backups…

Redundancy is good, but keep in mind that it is not a backup.

One of the most popular backup strategies is the 3-2-1 rule. It advises keeping 3 copies of your data, on 2 different types of storage, with 1 copy off-site in a remote location.
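The rule is simple enough to express as a small check. The sketch below is only an illustration: the `DataCopy` class, the `satisfies_3_2_1` function, and the medium labels are all hypothetical names, not part of any backup tool.

```python
from dataclasses import dataclass


@dataclass
class DataCopy:
    name: str
    medium: str     # free-form label, e.g. "raid1-hdd", "backup-hdd", "cloud"
    offsite: bool


def satisfies_3_2_1(copies):
    """True if there are >= 3 copies, on >= 2 media, with >= 1 off-site."""
    media = {c.medium for c in copies}
    return (
        len(copies) >= 3
        and len(media) >= 2
        and any(c.offsite for c in copies)
    )


local_only = [
    DataCopy("live data", "raid1-hdd", offsite=False),
    DataCopy("local backup", "backup-hdd", offsite=False),
]
with_cloud = local_only + [DataCopy("cloud backup", "cloud", offsite=True)]

print(satisfies_3_2_1(local_only))   # False: only 2 copies, none off-site
print(satisfies_3_2_1(with_cloud))   # True
```

A mirrored pair counts as one copy here, which matches the point above that redundancy is not a backup.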

That’s how I ended up buying one more 4TB HDD for backups. Add to that one more 2TB HDD for family photos and other stuff, and a 120GB SSD for experiments, and the total is 6 drives in the server.

What about off-site backup?

Initially, I was planning to back up to cloud storage twice a year. I even decided to go with Backblaze, as they provided cheap but reliable storage. The downside of this method was the need to pay monthly, so I’m working on finding alternatives.

Hardware features

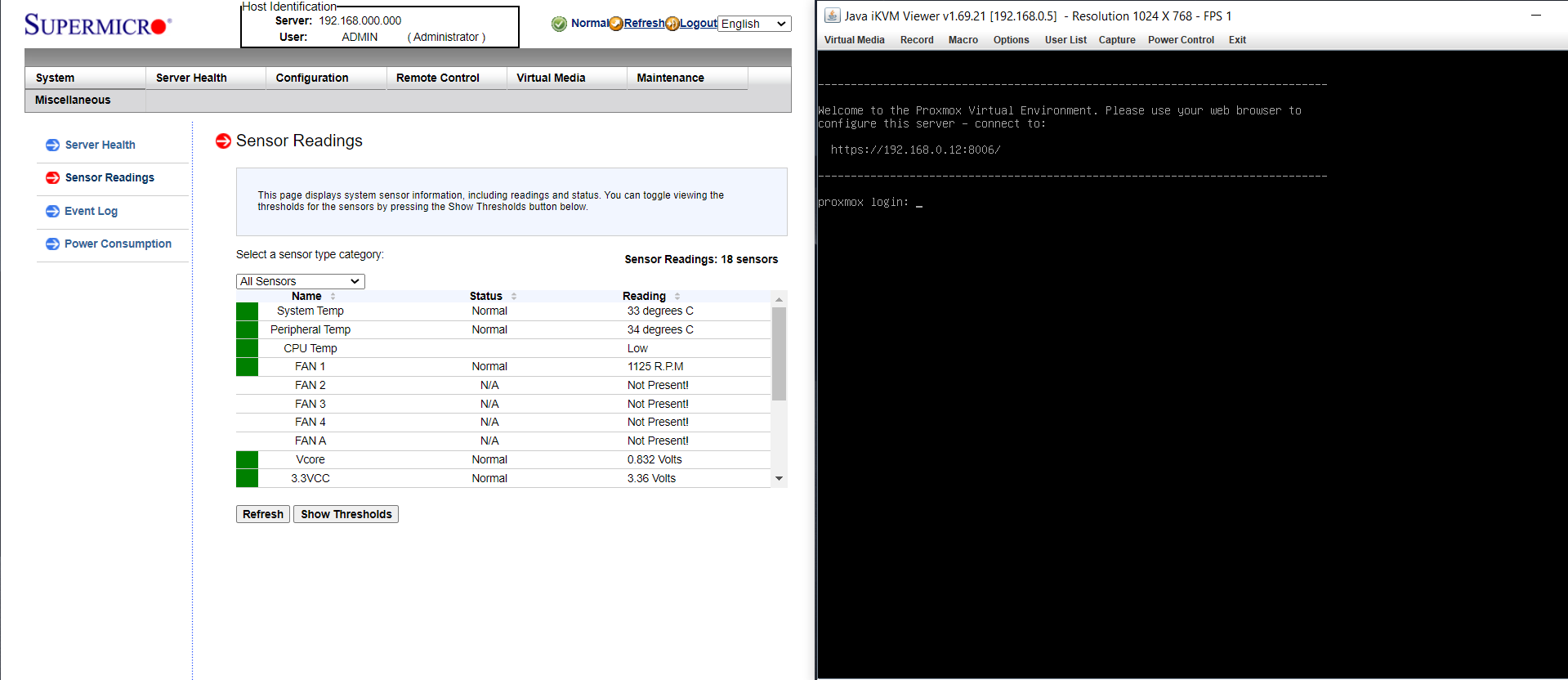

Coming back to the third port of the server: it is a management port for IPMI.

You can imagine it as a small computer within the server (because it is) that runs 25/8 (assuming the server works 24/7), even if the server is off or corrupted. It runs a small web server that lets me remotely (!) check sensor data, reboot and power on the server, control it, and even mount a virtual drive with an OS installer. That feature has saved me many times and continues to do so.
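Besides the web interface, IPMI controllers can usually be reached from a script as well. The sketch below assumes the `ipmitool` CLI is installed and that the board accepts IPMI-over-LAN (`lanplus`) connections. The address `192.0.2.10` and the user `admin` are placeholders, not values from my setup, and the helper names are made up. The password is read from the `IPMI_PASSWORD` environment variable via the `-E` flag, so it never appears on the command line.

```python
import subprocess


def ipmi_command(host, user, *action):
    """Build an ipmitool command line (password comes from IPMI_PASSWORD via -E)."""
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *action]


def run(cmd):
    """Run the command and return its text output; raises if ipmitool fails."""
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


# Placeholder host and user: replace with your own management address.
status_cmd = ipmi_command("192.0.2.10", "admin", "chassis", "power", "status")
sensors_cmd = ipmi_command("192.0.2.10", "admin", "sdr", "list")

# Uncomment on a machine that can actually reach the management port:
# print(run(status_cmd))
# print(run(sensors_cmd))
```

Building the command as a list and keeping `run` separate makes it easy to check what would be executed before pointing it at real hardware.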

As you can see from the photo of the motherboard above, it has a total of 6 SATA ports for connecting disks. But only 2 of them are high speed (6Gb/s), and the rest run at half of that. This, together with a problem with disk virtualization, led me to buy an LSI PCI card that has 2 cool ports that multiply to 4 SATA ports.

Software

As the host system I’m using the Proxmox hypervisor. At its core is a system designed to run on minimal resources and provide a convenient way to manage VMs (Virtual Machines). You can imagine it as splitting the hardware resources between N VMs, each one running its own OS.

TrueNAS

One of the VMs runs TrueNAS, a storage operating system designed mostly for NAS (Network Attached Storage). Its main feature is that it uses the OpenZFS filesystem. You may know filesystems like NTFS, FAT32, and others, but this one has a lot of advanced functionality.

Realtime compression

Imagine having everything archived in a zip file, except it works like usual. Every single file is compressed automatically, and you see no difference. But for text files, for example, the difference in file size can be huge.
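To get a feel for how well text compresses, here is a small Python experiment. It uses `zlib` from the standard library as a stand-in, which is not necessarily the algorithm the filesystem applies, and the log line is made up for the demo.

```python
import zlib

# A made-up, repetitive log file: typical of text that compresses well.
text = ("12:00:00 INFO backup finished without errors\n" * 200).encode()

compressed = zlib.compress(text)
restored = zlib.decompress(compressed)   # reading gives back the exact original

ratio = len(text) / len(compressed)
print(f"{len(text)} bytes -> {len(compressed)} bytes (about {ratio:.0f}x smaller)")
```

The filesystem does the same kind of work transparently on every write and read, so applications only ever see the uncompressed contents.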

Checksums

The idea is that every piece of data is verified against a stored checksum when it is read, so random errors, corrupt files, or a failing drive are detected instead of going unnoticed.

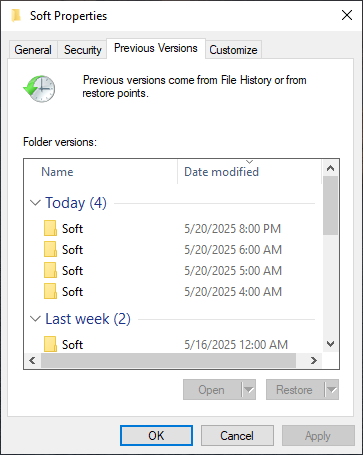
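The principle behind checksums fits in a few lines of Python. This is a simplified sketch: the `store` and `read` helpers are made up, and SHA-256 from `hashlib` is used here only because it ships with Python, not because it is necessarily what the filesystem is configured to use.

```python
import hashlib


def store(data):
    """Keep the data together with a checksum computed at write time."""
    return {"data": data, "checksum": hashlib.sha256(data).hexdigest()}


def read(block):
    """Recompute the checksum on read and refuse to return corrupt data."""
    if hashlib.sha256(block["data"]).hexdigest() != block["checksum"]:
        raise ValueError("checksum mismatch: data is corrupt")
    return block["data"]


block = store(b"holiday.jpg contents")
clean = read(block)                        # passes verification

block["data"] = b"holiday.jpg c0ntents"    # simulate silent corruption on disk
try:
    read(block)
    corruption_detected = False
except ValueError:
    corruption_detected = True
```

Combined with a mirrored pool, a detected mismatch can be repaired from the healthy copy on the other drive rather than just reported.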

Snapshots

Probably the coolest feature you can get. You can take them every hour or even every minute. They cost you almost nothing, and you can take as many as you want. A snapshot only takes up space for files that were changed afterwards. So if something goes wrong, or you delete a very important file, you can recover it in a matter of seconds.
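Here is a toy model of why snapshots are so cheap. The `Dataset` class and the file names are invented for illustration, and a real filesystem works on blocks rather than whole files, but the principle is similar: a snapshot records references to the existing data instead of copying it.

```python
class Dataset:
    def __init__(self):
        self.files = {}        # file name -> contents
        self.snapshots = {}    # snapshot name -> frozen view of the files

    def snapshot(self, name):
        # Copies only the name -> contents references, not the contents.
        self.snapshots[name] = dict(self.files)

    def restore_file(self, snapshot_name, filename):
        self.files[filename] = self.snapshots[snapshot_name][filename]


data = Dataset()
data.files["thesis.txt"] = "chapter 1"
data.files["photo.jpg"] = "pixels"
data.snapshot("hourly-01")

data.files["thesis.txt"] = "chapter 1, revised"   # changed after the snapshot
del data.files["photo.jpg"]                        # oops

data.restore_file("hourly-01", "photo.jpg")        # back in a moment
```

The snapshot still holds the old `thesis.txt` too, so only files that changed after it was taken make the snapshot "cost" anything.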